Sound Media: Part II – Backwards history

Theoretical introduction to sound media

7. Tape control: A revolution in recorded music, 1970s – 1950s

8. The acoustic nation: Live journalism, 1960s – 1930s

9. Microphone moods: Music recording, 1940s – 1930s

10. Atmospheric contact: Experiments in broadcasting, 1920s – 1900s

11. The repeating machine: Music recording, 1920s – 1870s

7. Tape control – A revolution in recorded music, 1970s-1950s

If the term ‘revolution’ is to be used at all in this book, it should describe the introduction of micro-electronics and magnetic tape in Europe and America. In the 1970s there was a wholesale uptake of synthesizers, tape effects and multi-track recording, and the musical soundscapes of the West were changed forever. It is always dangerous to proclaim revolutions, but I will present three case studies that support my argument well. Firstly, the Residents play a weird, tone-generated melody in 1974; secondly, Sly and the Family Stone play a densely produced funk track in 1973; and lastly, the British rock experimentalists Traffic make psychedelia in 1968. All this music is heavily edited (cut up), and has nothing to do with the old ‘live on tape’ performances of the 1950s and before. The documentary realism of live recordings was marginalized in a matter of years. This change towards densely cut up and rearranged tape is the revolution I am talking about.

Backwards history

Now the backwards history will begin in earnest, and I anticipate a level of scepticism among some of my readers. But the move backwards from the present time to the past is quite simple; I jump from the digital media of our own time to the analogue media in their final configuration before they became obsolete. All the remaining chapters discuss analogue media in one way or another.

First I will sketch the general background atmosphere during the 1970s and 1960s. The period was highly advanced in technological terms, as the NASA moon landing in 1969 demonstrates. The Cold War was at its coldest, and the USA had strengthened its position with the Apollo programme (Hobsbawm 1994: 231ff). On the cultural front grave old men had always dominated the public sphere, but now young people started making a claim to dominance. In the USA in the 1960s new rights were gained for women and racial minorities, and there was a more open and informal society, at least at the everyday level. It was a better time to live in both the USA and Europe for more people than ever before. Although bad things were happening, for example the Vietnam War, people’s living standards went up in all countries of the West (see Marwick 1998 for a comprehensive study of the cultural revolutions of the 1960s). Consumer comfort eased the strain of living with the impending threat of nuclear annihilation, and the era was marked by cultural optimism.

In 1974 the media environment was very different from ours. There were no mobile phones, no personal computers and no internet. Computers were used only in banks, insurance companies and universities. But we should not underestimate the richness and attraction of this earlier media environment.

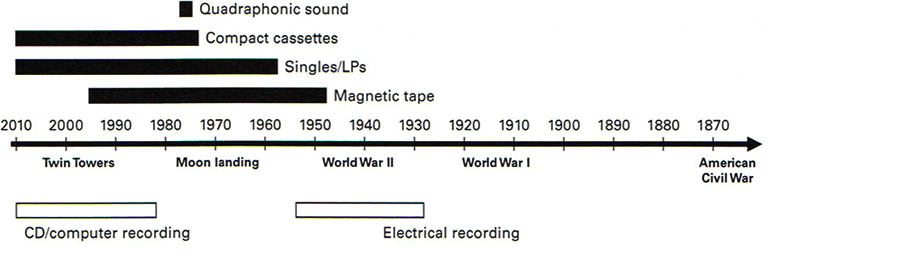

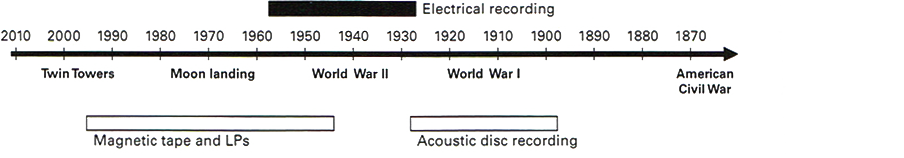

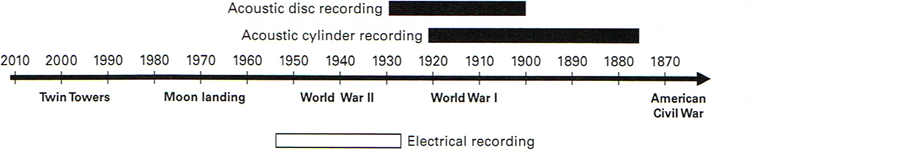

Figure 7.1 shows the platforms on which this chapter focuses in black. There were four essentially different platforms: magnetic tape, which was used mostly in professional production milieus; singles and LPs, used for the mass distribution of music; compact cassettes, used both for mass distribution and for personal recording of media sound; and the short-lived quadraphonic sound system. I will focus here on magnetic tape and the LP. Notice that LPs and cassettes are still available, although they are marginal compared to CDs and computer files such as mp3. The platforms that preceded and came after these analogue platforms are listed in figure 7.1 below the arrow. Computer recording is discussed in chapter 3, while electrical recording is treated in chapter 9.

However, there were several influential recording media in the mid-twentieth century. From the late 1920s sound film inspired creative developments in sound editing on celluloid film, and there was also advanced tape editing in radio plays and reportage quite early on. When television was introduced in the 1950s yet another platform for complex edited narratives came about (see Barnouw [1975] 1990; Winston 2005: 330ff). Although these media worked independently of each other, there were many creative inspirations between them.

For the domestic media consumer in Europe and America there was colour television, and there was transatlantic telegraphy, telephony and telex (with its ticker tape to throw at the parade). There were 3D sound films from Hollywood, dozens of radio stations on AM, newspapers, magazines and books. As well as colour 35 mm stills film there were cheap, portable cameras for people to record their family history with.

Ordinary people in the West had acquired a very relaxed and natural attitude towards the mass media and the music industry. Young people would enjoy music on the stereo, blasting it out into the neighbourhood without any worries. Starting in the mid-1960s, recorded music entered a stage of explosive cultural activity, and artists such as Bob Dylan and the Beatles put their stamp on cultural history almost as forcefully as Shakespeare did four hundred years previously. Electronic media and their noisy pop cultures were slowly recognized as an important part of the cultural heritage, not only in the public eye but also among academics (for positive and negative perspectives, see Boorstin [1961] 1985; McLuhan [1964] 1994; Ellul 1964; Barthes [1972] 1993).

Musicians were increasingly technology savvy. If an artist had success, they would be allowed increasing creative control and high-tech recording equipment. This allowed them to break with traditional ways of doing things, and increased the potential for originality with the aid of high-quality sound, stereo and tape cutting and splicing. During the years of psychedelia and counterculture there was an increasing popular acceptance of the expressive character of recording. Producers such as Phil Spector and George Martin became cultural icons in their own time (for studies of rock and popular music in this period, see Gracyk 1996; Jones 1992).

Not everyone liked the new production values. When Bob Dylan went electric in 1965 there was outrage among his fans in the folk song movement. Pete Seeger disliked the loud electrical sound so much that he said he wanted to pull out the electrical wiring system to stop the music (Shelton 1987: 302–3). This was an outcry against a certain type of sound just as much as against the commercial potential of Dylan’s turnaround. It says something about the unnerving character of the electric guitar, bass and miked-up drums.

Recording signature, 1974

Now I will begin the analysis of music production with magnetic tape. Artists could choose between two production aesthetics: shaping sound in support of a live performance image, or shaping the recording as an artistic message in its own right. Along these lines William Moylan (1992: 77) argues that ‘the recording process can capture reality, or it can create (through sound relationships) the illusion of a new world. Most recordists find themselves moving about the vast area that separates these two extremes.’ Before the introduction of tape, artists had no choice but to support the live performance, and this seventy-year long state of affairs will be described in chapters 9 and 11.

The favoured style of music in the 1960s was a composite one which had the sound of something fragmented or cut up. The artists could choose the best of many partial performances during a prolonged recording process, and construct just one authoritative master that would be exactly as the producer wanted it to be; this is what I call the recording signature. Rock music production involved a complex repetitive process, tracks being assigned and filled with layer upon layer of sound elements, and small parts of performances would be redone regardless of whether the whole band was present. Like a literary text, the recording is aesthetically and rhetorically well organized.

Some rock groups pushed the new timbres and technologies to the limit. The first case study in this chapter is by the Residents, who used electronic tone-generators and rhythm boxes to create eerie melodies on the LP Not Available (1978). They played alternative synth and drum machine rock, and are still well known among lovers of alternative rock and art music. The album is among the best in the Residents’ catalogue and was recorded four years before its release. I chose this track because it displays the equipment sound in a way that is parallel to the style of Autechre (track 11). Listeners in the 2000s may not find these sounds as eerie as listeners did in 1978.

In 1974 the production values of recorded music were very high, something that such contemporary artists as Steely Dan and Pink Floyd demonstrate. It was the peak of innovation in analogue technology, and the next step would be digital. Tape equipment had an aura of advancement, and it appeared in movies such as The Conversation (Francis Ford Coppola, 1974) and Klute (Alan J. Pakula, 1971). The Residents exposed the medium’s potential for creating synthetic acoustics, a world that only exists because of electronic tone-generators. ‘Never Known Questions’ is a weird intonation of concerns, and the words are just as redundant as the melody, making the track sound like a kind of tribal incantation.

Track 20: Residents: Never Known Questions, 1974 (1:20).

Falling guards and winking bards are just a need today.

Falling guards and winking bards are just my needs. Okay?

Okay. Okay. Okay. Okay.

Okay. Okay. Okay. Okay.

Okay. Okay. Okay. Okay.

Okay. Okay. Okay. Okay. Okay.

To show or to, to be shown is,

A question never even not known,

By many to exist.

To show or to be shown,

A question never, never known,

not even by many to exist.

There are at least five instruments involved: piano, drums, synth/organ, horns, and a singing voice. The synths and voice all have the same wailing and hypnotizing sound, but the song after all conforms to traditional norms of melody, vocal performance and rhythm. This recording is not as extreme as the Residents’ album Eskimo (1979), which departs completely from recognizable chord progressions. In the 1980s groups such as Tuxedomoon and the Cure would have success with sounds similar to ‘Never Known Questions’.

It is not easy to hear what techniques the musicians have used. Even the most well-informed of listeners would be hard pressed to find out what was done in the studio just by listening to the album. We need the stories of people attending the recording sessions, we need a studio log and, most importantly, we need to know what equipment was used. Music lovers would increasingly attempt to disclose the techniques that were used in the recording studio and make sense out of all the different types of strange sounds. At the artistic end, mixing consoles and multitrack recorders have become instruments in their own right. The medium of recording no longer just produces a good reproduction of musical events: ‘The technical equipment is seen not as an external aid to reproduction but as a characteristic of the musical original, employed as part of the artistic conception’ (Middleton 1990: 69).

The Residents and other artsy rock bands of the 1960s and 1970s, such as Frank Zappa and the Mothers of Invention, learnt brand new musical techniques (Watson 1993). Paul Théberge (1997: 220) argues that, in order to work creatively in the studio, ‘musicians and engineers had to acquire both a basic theoretical and a practical knowledge of acoustics, microphone characteristics, electronic signal processing, and a variety of other technical processes.’ Listeners responded favourably to the new sound. Simon Frith (1998: 244–5) claims that ‘we now hear (and value) music as layered; we are aware of the contingency of the decision that put this sound here, brought this instrument forward there. It’s as if we always listen to the music being assembled.’ The process of modification was now an integral part of the aesthetic product, and the recording equipment was becoming as important as the musicians and their instruments. Remember that this structure of experience has now been in place for about forty years, and it is difficult for us to imagine it as an untried thing. Nevertheless this is what I am attempting to do in this chapter.

As musicians and producers came to learn new techniques of producing sound, listeners came to learn more sophisticated ways of listening to that sound. Simon Frith describes the distinctly hermeneutical attitude that was taken towards these montages in order to be able to hear them as recognizably real.

I listen to records in the full knowledge that what I hear is something that never existed, that never could exist, as a ‘performance’, something happening in a single time and space; nevertheless, it is now happening, in a single time and space: it is thus a performance and I hear it as one.

(Frith 1998: 211)

From the early 1970s there were few expectations of documentary realism among popular-music listeners. However, the act of listening became more complex, and now had room for new uncertainties that could create a sense that the value of musical performance as such had been weakened. But in the end the techniques of multitrack sound became so widespread and so influential that they came close to being perceived as a neutral feature, appearing to be the ‘natural way’ of doing things, and seeming to be exclusively a matter of social appropriation rather than of material determination.

Continuity realism, 1973

One expectation in particular is almost ineradicable among listeners. This is the expectation that a song has a straightforward verse and chorus, bridges, harmonies and solos, and that it lasts approximately three minutes. Technology meant that live performance was no longer required, but the concert scene had a conservative influence in this regard, and a groove or beat that is not too extreme in aesthetic terms is also required for dancing. Both disco and funk in the 1970s demonstrate the need for straight continuity very well.

My point is that the traditional organization of songs was required for cultural reasons, just as it had always been. At the great festivals of the 1960s, such as Woodstock, the music from the LPs was played live. Audiences expected to hear the songs they loved more or less as they sounded on the stereo LP, but with extended solos and other embellishments. However, there was a subtle change going on in this regard.

It has something to do with the fact that multitrack recordings didn’t need to convey a continuous event. The resulting recording could end up like the McLuhan LP, where all kinds of sounds were mounted on top of each other in a flow that bears little resemblance to traditional performances of speech or song. Hearing multitrack music, the public could not as easily relate the qualities of the finished piece to traditional musical qualities, such as good improvisation skills, a good mood in the band and other human aspects always associated with live performance. Among musicians there was an increased tolerance towards experiments on the part of the engineers, original editing and mixing decisions, and all kinds of experimentation with pitch, echo, reverberation, and so on. In short, the band might not be able to pull off a good live performance even if they wanted to. They had become good at something other than being a tight combo. In 1966 the Beatles stopped touring, and one of the reasons given was that it was impossible to produce live performances of the songs on Revolver (1966) and later Sgt Pepper’s Lonely Hearts Club Band (1967).

The obvious way to avoid this dilemma was to use post-production techniques to create an extra rich and varied continuity recording. The next case demonstrates this strategy well. Sly Stone was an African-American vocalist and bandleader well known to lovers of funk and rhythm and blues. One of his best LPs is Fresh (1973). Here Sly and the Family have created a series of densely layered songs which are nevertheless quite straightforward sounding, and comfortable to listen to. The album was recorded on eight- or sixteen-track stereo tape.

Track 21: Sly and the Family Stone: If You Want Me To Stay, 1973 (1:26).

If you want me to stay

I’ll be around today

To be available for you to see

I’m about to go

And then you’ll know

For me to stay here I’ve got to be me

You’ll never be in doubt

That’s what it’s all about

You can’t take me for granted and smile

Count the days I’m gone

Forget reaching me by phone

Because I promise I’ll be gone for a while

When you see me again

I hope that you have been

The kind of person that you really are now

You got to get it straight

How could I ever be late

When you’re my woman takin’ up my time

How could you ever allow

I guess I wonder how

How could you get out of pocket for fun

When you know that you’re never number two

Number one gonna be number one

I’ll be good

I wish I could

Get this message over to you now

The Family Stone was a large band, with at least ten different instruments and almost as many musicians. There is a trumpet, several saxophones, drums, bass, electric organ, electric guitar and piano, plus of course Sly’s singing voice. The lyrics are easy to sing along with, in contrast to the Residents’ track. The group of musicians is expressive and energetic, typical of early funk (Danielsen 2006).

Sly Stone is at this point in his career on a downward spiral of drug use and other standard rock-star behaviour, but this has not weakened his performance. He has a powerful and characteristic voice, which flexes and flows along with the dense accompaniment. Sly sings quite cynically about male–female relationships, and avoids political topics of his day such as the Vietnam War, the liberation of African Americans and women, etc.

My main reason for presenting ‘If You Want Me to Stay’ is that it is a good example of continuity realism. Like most musicians Sly was economically dependent on going on tour to promote his records and earn money. As suggested, the live concert scene required the kind of conventional performance of which Sly’s funk song is fully capable, while the song still exploits the new production techniques to the full. In contrast, Stevie Wonder, on Innervisions (1973), camouflaged the extreme discontinuity of the recording process. He actually plays all the instruments on the track ‘Jesus Children of America’, and also sings all vocal parts and has arranged and produced the album himself, but it sounds like a full band of musicians playing enthusiastically together. This all goes to show that complex production can be arranged so that it becomes inaudible, and the unsuspecting listener would never hear it as the cut-and-paste production of a one-man band.

The strategy of continuity realism involves a tacit understanding between the producer and the listeners: the more the producers camouflage the multitrack characteristics of the recording, the more the listeners will acknowledge the musical qualities as authentic and skilful. This is inaudible recording: it tries to hide the discontinuity of multitrack production techniques and to pose as old-fashioned ‘live on tape’ recording.

Flaunting the montage, 1968

Psychedelic music displays well another approach to the magnetic tape medium. As I have stated, it had so many opportunities for post-production that continuity realism no longer had to apply. This feature was of course willingly exploited, for example in an extreme way by the Beatles on ‘Revolution no. 9’ (1968), and in a beautiful way by the Beach Boys on ‘Good Vibrations’ (1966). However, in purist milieus such as classical and folk music there was considerable consternation over the new production methods. They were considered radical and destructive.

Traffic was a rock group from England, and it is still well known to fans of 1960s music. The next case study is rather obscure, although it can be found on Traffic’s breakthrough album Mr Fantasy, issued in 1967, the summer of love and psychedelic music. The British invasion was over, although several bands – the Beatles, Cream, the Who and Steve Winwood’s Traffic – survived the change in music trends.

Track 22: Traffic: Giving to You, 1967 (1:15).

Listen baby, do you see this town … baby someday this can all be yours. Hey, Sweetheart … I mean … you know … I mean … it’s like … you know … it’s jazz man … I dig jazz … it’s got … its like … it’s jazz … is … is … you know … where it’s at … you know where I’m at … I mean jazz.

The group only has three members, who play all the instruments and contribute all the vocals. It sounds as if there are three different voices, electric guitar, bass, drums, electric organ and flute. It is difficult to say how many instruments there are, since of course one musician could be playing several different instruments on the completed recording. The piece begins with a soft electric guitar that is drowned out by the montage of simultaneous bits of speech and scatting. The babbling is mixed up loud, but an up-tempo beat with an organ lies underneath and rises slowly as the recommendation of jazz progresses. With an electronic beep the ‘jazz’ takes over, and three minutes of polished jazz flute follows. My excerpt fades out after a minute, but at the end of the piece the dense babbling comes back, and suggests that the element of continuity realism during the jazz proper is really dependent on the new multitrack regime.

The first part of ‘Giving to You’ is an exemplary piece of multitrack tape music from the mid-twentieth century; it resembles track 1.1 with Marshall McLuhan, and is archetypal of early multitrack productions. This piece is primarily a texture of sound, and only secondarily is it a reference to a set of external events. The kind of voice manipulation used became more normal in the wake of Elvis Presley’s early recordings: ‘Elvis Presley’s recorded voice was so doctored up with echoes that he sounded as though he were going to shake apart’ (Chanan 1995: 107). In sum the listener is presented with a highly realistic impression of something impossible, what Evan Eisenberg (1987: 109) calls a ‘composite photograph of a minotaur’.

But, in contrast to the Residents’ track, it is quite easy to hear that the musicians are cutting up and splicing tape at full tilt. The so-called cut-and-splice technique which is used at the beginning of Traffic’s ‘Giving to You’ was more widespread in film production and radio reportage. Such techniques lay the sound signal bare in a way that the gramophone could never accomplish. The reels and tape and wiring opened up recorded sound so that it could be touched and handled at length.

The magnetic recording medium

I will now discuss the technological basis of the new music culture. At one level there was nothing new. The magnetic recording medium was completely asymmetrical, just as the gramophone medium had been. Of course it inherited the structure of the industry, with professional distribution of music to dispersed listeners.

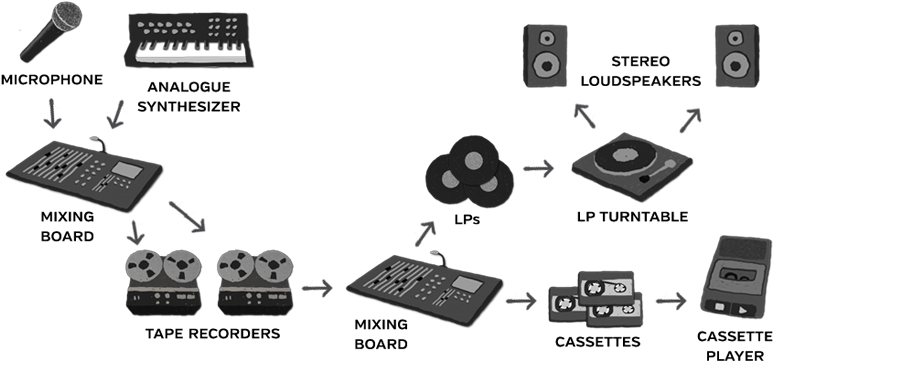

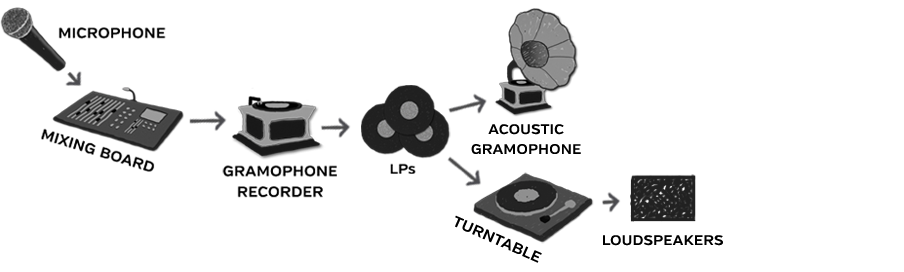

Figure 7.2 displays the main interfaces, platforms and signal carriers of the set-up I am investigating in this chapter. The recording studios were more complex than before, and there could be several multitrack tape recorders and mixing boards. The studio involved not just singers and musicians playing into a microphone, but also analogue synthesizers such as the Moog. The complexity of the medium is further shown by the fact that three different recording platforms were involved in the communication between producers and listeners: the professional tape system in the studio, plus the industrially copied singles and LPs and the industrially copied cassette tapes. The domestic stereo set with two rather big loudspeakers, a turntable and an amplifier was to dominate music consumption for twenty years, from the 1960s to the 1980s. The cassette player was often in mono only, and was typically not used for high-quality listening experiences, but it was portable and offered a far more versatile way of listening to music than the LP stereo set.

All the platforms required electrical amplification. This meant that the LPs and singles were unplayable on the mechanical gramophones from the 1950s and before, and these playback devices quickly became obsolete. As the gramophone recording industry adapted to the magnetic production platform, there arose a distinct difference between the production equipment, with its enormous creative scope, and the domestic equipment, with its limited possibilities but high-fidelity experience. This was a process of professionalization, with a difference arising between the technical skills of producers and musicians on the one hand and the enjoyments of music lovers on the other.

The analogue signal carrier is a crucial feature of this medium. In contrast to the chapters of part I in this book, part II deals only with analogue recording, and this concept must be explained. To say that a signal is analogue means that the electro-mechanical movements for storing the signal are continuous with the vibratory movements at the microphone. The duration of the recording is physically proportional to the distance along the groove or tape band, and if the speed is not kept the same during playback as it was during recording the analogy will be lost. Analogue sound is also degenerative, meaning that it cannot be stored for a long time without deterioration, or copied to new discs or tapes without noise being added.
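The dependence on playback speed can be put in numbers. The following Python sketch is only an illustration under simplifying assumptions: the function name `play_back` and its parameters are hypothetical, and the tape is idealized, with no wow, flutter or noise. It suggests that running a tape at a speed other than the one at which it was recorded rescales both duration and pitch by the same ratio.

```python
def play_back(duration_s, frequency_hz, record_ips, playback_ips):
    """Return the (duration, frequency) heard when a tape recorded at
    record_ips is played at playback_ips. Idealized, illustrative model."""
    if record_ips <= 0 or playback_ips <= 0:
        raise ValueError("tape speeds must be positive")
    ratio = playback_ips / record_ips
    # The same length of tape passes the head faster or slower, so time
    # is compressed (or stretched) and pitch is raised (or lowered)
    # by the same ratio.
    return duration_s / ratio, frequency_hz * ratio


# A 440 Hz tone lasting 10 seconds, recorded at 7.5 ips, played at 15 ips.
print(play_back(10.0, 440.0, 7.5, 15.0))  # (5.0, 880.0)
```

At double speed the ten-second tone lasts five seconds and sounds an octave higher; only when the two speeds match does the listener hear what the microphone registered.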

It is worth mentioning that magnetic tape is a very tactile material, and engineers worked with a hand–ear coordination that bypassed vision. The tape has other interesting qualities. While one second of sound on a 78 rpm record was spread over a little more than one lap of the circular groove (1.3 laps, to be exact, since the disc turns 78 times in sixty seconds), one second of tape at 7.5 inches per second (ips) was contained in a straight strip the length of a grown man’s hand from wrist to fingertip (Gelatt 1977: 299). While the 1.3 laps on the turntable were virtually untouchable, the 7.5 inches of tape could be pulled out from the reel, cut free from the rest and stand alone as a tangible container of that second of sound. Consequently, the producer had no problem handling elements as short as one-tenth of a second. This kind of manipulation was impossible with the gramophone, where, as Roland Gelatt (1977: 299) points out, any splitting up of the recording would result in its destruction.
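The tangibility of tape follows from simple arithmetic, which can be sketched as below. This is a minimal illustration, not a description of any studio practice: the function name `tape_length_inches` is hypothetical, and the only figure taken from the text is the speed of 7.5 ips.

```python
TAPE_SPEED_IPS = 7.5  # inches per second, the speed cited in the text


def tape_length_inches(duration_s, speed_ips=TAPE_SPEED_IPS):
    """Length of tape, in inches, that holds duration_s seconds of sound."""
    if duration_s < 0 or speed_ips <= 0:
        raise ValueError("duration must be non-negative and speed positive")
    return duration_s * speed_ips


# One second of sound, and the one-tenth of a second an editor could handle.
for seconds in (1.0, 0.1):
    print(seconds, "s of sound occupies", tape_length_inches(seconds), "inches")
```

At this speed a tenth of a second occupies about three-quarters of an inch of tape, a piece still large enough to be marked, cut and spliced by hand.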

Gelatt sums up the developments in tape by comparing it with the older gramophone disc cutter, which had dominated the recording business up to that point: ‘A compact, lightweight reel of tape would play uninterruptedly for half an hour; a bulky and heavy record for four minutes. Tape did not wear out; records showed evidence of real deterioration after thirty or so playings. A broken tape could be spliced quickly and easily by anyone; a broken record could be tossed into the trash basket’ (Gelatt 1977: 288). And he points out that the maximum frequency response of a sound recording had been extended to a range of 20 to 20,000 Hz (ibid.: 299). It seems that the electromagnetic analogue could contain more of everything that was valuable – longer performances, more frequencies and more loudness – and in addition it became less degenerative.

By the late 1950s the tape machine had been improved from one track to two track, and this separation of tracks along the length of the tape made it possible to introduce stereo to the mass market (in combination with stereo pickups and new amplifiers). The two strands of sound had to be kept separate all through the mediation process, starting with two microphones routed through two channels on the mixing console to two separate tracks on a tape recorder. For playback the listeners needed an LP with two separate signals engraved and picked up by two pickups and amplified to two loudspeakers.

Michael Chanan (1995: 144) describes how frequently the electronics industry launched new versions of the tape technology. Indeed, it resembles the way in which computer software is regularly updated. Companies such as Nagra and Ampex introduced multitrack recorders, first with two tracks, then with three and four, before 1960. During the 1960s eight- and sixteen-track machines became industry standard. For each track there had to be a dedicated play- and recording-head, and on the tape itself there had to be guard bands to prevent sound from spilling over from one track to another. Furthermore, each play-head had to function independently from the others, so that, for example, one could record the drums and bass on two tracks without recording anything on the other two, and later record on the latter without recording on the former.

The functionalities of tape were convincing as far as the music industry in the USA was concerned. From 1947 there was a landslide towards tape in the radio industry, and magnetic recording soon became a standard tool for producing documentaries, for delaying the airing of programmes, and for storing sound in archives. ‘The old disc recording instruments previously used for broadcast transcriptions were unsentimentally abandoned. In a short and significant contest, tape had prevailed decisively’ (Gelatt 1977: 288). The same dramatic replacement soon took place in the music recording industry, at least in the USA. ‘Tape’s invasion of the recording studio, begun early in 1949, proceeded so implacably that within a year the old method of direct recording on wax or acetate blanks was almost completely superseded’ (ibid.: 298-9).

Proliferation of techniques

The most striking new technique was that of the producer star. From the time of Thomas Edison the sound engineer had been an equal partner with the performers, meaning that sound quality had just as great an influence as musical quality on how things were to be done in the studio. Engineering was not recognized in a positive, creative way until the advent of advanced recording processes in the 1960s. Before then, the engineer spent all his time improving the signal chain instead of aiding the artist to take advantage of creative potentials at the interface.

By now album sleeves referred to engineering as the craft of securing the best possible sound quality, while production was the craft of making aesthetic decisions about mixing and editing processes. And, increasingly, the musicians were their own producers. Steve Jones describes the double achievement: ‘The evolution of recording equipment moved … toward [both] greater accuracy in the preservation of the space and time of the event and greater flexibility in subsequent manipulation of that event’ (Jones 1992: 47-8). The creative artists were at the helm of this two-pronged innovation.

Editing is a very important new technique. As I have described, tape could be recorded on, rewound and played back immediately without noticeable detriment to sound quality. Such immediate playback was crucial to the art of multitrack recording, as it allowed the recording to be built up using layers of overdubs. ‘On the horizontal, one moment of sound could be followed by another that did not follow it in real time; on the vertical, any sound might be overdubbed with another that was recorded at a different time’ (Gracyk 1996: 51). In order to edit a single track without destroying the signal on the other tracks the technique of ‘dub editing’ or ‘punching in’ or ‘flying start’ became the norm (Chanan 1995: 144). For example, if two seconds of drumming had to be redone, but the guitar and vocal tracks were to be untouched, the tape would be wound to the right position, the faulty performance would be erased, and the new drum part would be punched in.
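The logic of punching in can be illustrated with a small Python sketch. It is a deliberately simplified model and not a description of how any actual tape machine worked: the tape is represented as a dictionary of named tracks, each a list of labelled segments, and the function `punch_in` and its parameters are hypothetical names chosen for the example.

```python
def punch_in(tape, track, start, new_take):
    """Replace part of one track with a new take, leaving the others untouched.

    tape     -- dict mapping track name to a list of segments (toy model)
    track    -- name of the track to re-record
    start    -- index at which the punch-in begins
    new_take -- list of segments that overwrites the faulty performance
    """
    old = tape[track]
    end = start + len(new_take)
    if start < 0 or end > len(old):
        raise ValueError("punch-in must fall within the existing recording")
    edited = dict(tape)  # the other tracks are carried over unchanged
    # Erase the faulty stretch and drop the new performance into its place.
    edited[track] = old[:start] + new_take + old[end:]
    return edited


tape = {
    "drums":  ["d0", "d1", "bad", "bad", "d4"],
    "guitar": ["g0", "g1", "g2", "g3", "g4"],
    "vocal":  ["v0", "v1", "v2", "v3", "v4"],
}
fixed = punch_in(tape, "drums", 2, ["d2", "d3"])
print(fixed["drums"])                      # ['d0', 'd1', 'd2', 'd3', 'd4']
print(fixed["guitar"] == tape["guitar"])   # True
```

Only the drum track changes, and only between the punch-in and punch-out points; the guitar and vocal tracks come through exactly as they were first recorded.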

There were also new techniques for musicians. Studio musicians and band members would know in advance that their contribution was only partial, and that it would probably be used in a different way on the finished master. A guitarist might have a three-second contribution to a given song. Paul Théberge (1997: 216) says that ‘individual performances became less important than the manipulation of individual strands of recorded sound material.’ Studio musicians first supplied the raw material for the recording, and subsequently the producers did the job of making it all fit together. In many cases the musicians were reduced to the role of manual workers in a production controlled by one person, for example in the case of Roger Waters and Pink Floyd or Frank Zappa and the Mothers of Invention.

The techniques of balancing or mixing the sound also gained a new complexity. In the studio the microphones were placed in optimal relation to the sound source, and the signal was routed into the mixing console in the control room. Here the mixing engineer would monitor all sounds and adjust parameters such as frequency response, reverberation and volume. This set-up had basically been in place since the late 1920s, but now each microphone (or electronic source) could also be assigned to a separate track on the multitrack tape machine. Mixing would generally refer to the later stages of recording, where the basic tracks were calibrated in relation to each other on each pertinent parameter, and when everything was just right it would be recorded on a master stereo tape. In the gramophone era there was no such thing as the ‘later stages’ of recording.

Home stereo

On the domestic end of the medium there was a significant change of equipment, and increased interest in the technologies of sound. The LP was ushered in on the market in the late 1950s, and it could contain up to twenty minutes of music on each side. This was in itself a striking improvement, especially for lovers of classical music, who could now hear much longer stretches of a symphony without interruption (Millard 2005: 204ff).

In the 1970s young adults bought their own stereo systems, and they had more powerful amplifiers and better loudspeakers than before. The term hi-fi freak denotes people who are obsessively concerned with their technical setup and often less concerned about the music. People could play their good sound very loudly, and gather with friends to enjoy the music. Stereo sound soon became a popular new fixture of the home (Chanan 1995: 94).

Stereo means that two different tracks are separated through the recording and playback process, and different sound sources (instruments and vocals) can be placed in the different channels. I will return to Traffic (track 22) from 1968 to demonstrate the point. Traffic placed some instruments entirely in the right and others entirely in the left channel; for example the flute is in the left channel. This seemingly crude mixing is a symptom of how new stereo was. The musicians and producers had not really found a good way of using stereo, so they separated everything fifty-fifty in the two channels. Early Beatles recordings, for example Help (1965), also had very crude stereo effects.

In the 1960s stereo records were often played on simple mono equipment, and none of the stereo effects could be heard. Those who actually owned a stereo system with two speakers would notice that the music was more powerful and impressive in stereo. ‘Essentially’, Roland Gelatt argues, ‘stereo aimed at reproducing the spaciousness, clarity, and realism of two-eared listening’ (1977: 313). ‘Because the sonic image emitted by each speaker differed in slight but vital degree, an effect was re-created akin to the minutely divergent “points of view” of our own two ears’ (ibid.: 314). He goes on to say that no one hearing stereo tape recordings for the first time could fail to be impressed by ‘their sense of spaciousness, by the buoyant airiness and “lift” of the sound as it swirled freely around the listening room’.

In chapter 3 I discussed the analytic attitude of the music lover in the 2000s, when the synthetic sounds of computers had supplied new variables to listen to. In the 1960s it was stereo that supplied new variables. The listener could now balance two signal outputs to his own liking, steering the sound towards a sweet spot by loudspeaker placement as well as electronic balancing on the amplifier. This allowed people to listen to music in a new way, ‘savoring its breadth and depth as well as its melodic outlines and harmonic textures’ (Gelatt 1977: 314-15). If the listeners were seated in a fixed position in their living room, the sweet spot, they could locate different sound sources in the recording on a horizontal line between the left- and right-hand side of the stereo system.

Stereo created an image of better sound also in the commercial sense of the word. The record company rhetoric continued to be one of superlative recommendation of a new sound experience, just as with electrical recording in the 1930s and with CDs in the 1980s. In 1960 RCA Victor stated that what they called ‘Living Stereo’ creates a ‘more natural’ and ‘more dimensional’ sound: ‘For the first time, your ears will be able to distinguish where each instrument and voice comes from — left, right or center. In short, enveloped in solid sound, you will hear music in truer perspective.’ In the 1970s Quadraphonic surround sound, which relied on four separate channels throughout the recording and reproduction process, was also introduced. For various reasons this initiative failed to enter the mainstream markets (see Harley 1998: 290).

Stereo loudspeakers could be seen as a way of making recorded sound even more realistic than before, since it resembles human bidirectional hearing. But in perceptual terms this notion was problematic. The problem had long been encountered in movie production. In 1930 a film sound technician, frustrated by the spatial signature resulting from the use of multiple microphones, described the blend of sources as creating a listener construct ‘with five or six very long ears, said ears extending in various directions’ (Altman 1992b: 49). Instead of realism, what was added was a bigger and more impressive spatiality, noticeable in the greater precision of sound placement and the greater dynamics of volume. But there was no increase in the documentary realism of sound. Evan Eisenberg points out:

That many listeners are still uncomfortable with stereo is evident from the way they place their speakers: pointlessly close together, or else on opposite walls. The latter arrangement is even more comfortable than monophony, as it creates no focus of attention, so no illusion of human presence, so no disillusionment.

(Eisenberg 1987: 65)

From being relatively true to the central perspective of sitting in the best seat in the concert hall, recordings were increasingly catering to a subjectively pleasing experience for listeners. The aesthetics of recording turned away from the ideal of perfect reproduction of performances at the microphone, and further towards an ideal of perfect balance of sound at the loudspeaker.

There is an interesting environmental dimension to stereo sound. Glenn Gould, writing in 1966, argued that domestic ‘dial twiddling’ ‘transforms that work, and his relation to it, from an artistic to an environmental experience’. ‘The listeners’ encounter with music that is electronically transmitted is not within the public domain’, Gould claims. ‘The listener is able to indulge preferences and, through the electronic modifications with which he endows the listening experience, impose his own personality upon the work’ (Gould 1984: 347). He elaborates on the sense of intimacy that high-quality recording gives: ‘The more intimate terms of our experience with recordings have since suggested to us an acoustic with a direct and impartial presence, one with which we can live in our homes on rather casual terms’ (ibid.: 333).

8. The acoustic nation – Live journalism, 1960s – 1930s

If there is such a thing as an enlightened public, in the mid-twentieth century it was informed more by sound than by light. Television was not established, and radio was a paternalist voice in the life of the West. This was the golden age of sound radio, and it could reach 50 to 60 million people in countries such as the UK, France and Germany, and even more in the USA. In 1969, Americans owned 268 million radios (Schafer [1977] 1994: 91).

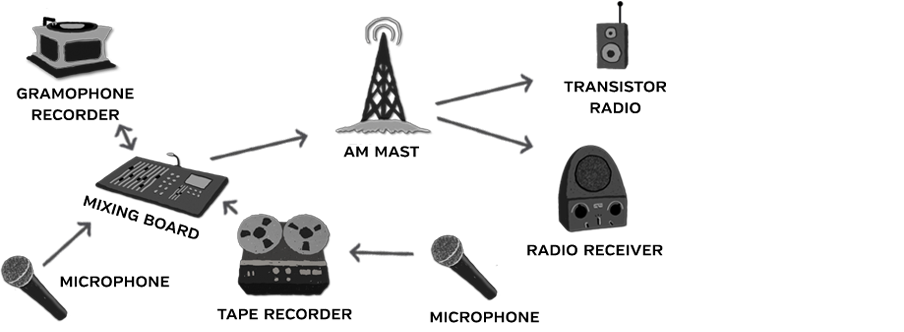

I will tell the story of radio’s auditory rhetoric by providing case studies of dominant broadcasters over a time span of thirty-eight years and going into the production procedures of NASA, NBC, the BBC, CBS and Swedish Public Radio. All had a conservative production culture that focused on live programming. This was easy to exploit for nationalistic purposes, and every nation of the world did so.

Backwards history

The ideological setting for broadcasters was challenging. Graham Murdock (2005: 219) argues that European governments were fearful of popular insurrections in the 1920s and 1930s, and in response they set out to make the nation the primary source of social identity. The BBC and other national broadcasters played a particular role in this symbolic nationalization. Looking at the world stage from the 1970s and backwards, there was a constant state of conflict – in the post-war era between the free world and the communist world, and before that between the Allies and the Axis during World War II. Broadcasting had been used as a large-scale propaganda tool also before the war, in Germany in particular, where Adolf Hitler had the dark genius of Joseph Goebbels to guide him. There was a constant war of words in sound radio (Briggs and Burke 2002: 217).

During the war the public mood in European countries and North America was very tense, ranging from fear and hatred to self-confidence and pity. In Europe at least, citizens had to endure a blackout every night for close on six years. The desire for safety and comfort within the home was strong. Radio had a double function in this regard. It was an imposing presence that could bring bad news – for people in Europe there was a great chance that such news would affect them directly (being enlisted in the army, having to flee from imminent bombing, more death and no end in sight) – but it was also a comforting presence bringing music, entertainment and the good mood that broadcasters tried to spread among the population. There are many studies of radio’s role during the war; see, for example, Barnouw 1968; Briggs 1970; and Bergmeier and Lotz 1997.

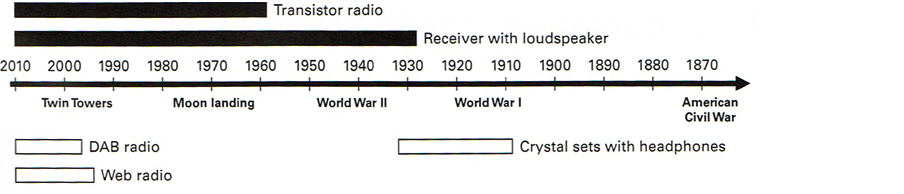

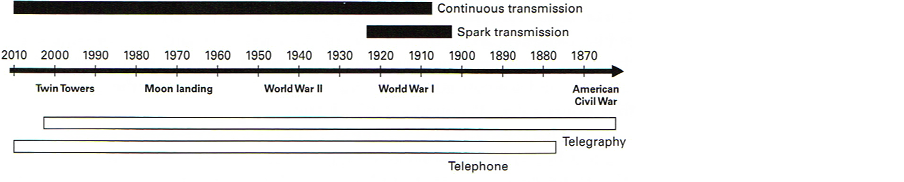

As the black boxes in figure 8.1 indicate, this chapter deals with a remarkably stable period in broadcasting history. From the mid-1920s all the way up to the 2000s people listened to analogue radio with loudspeaker receivers, represented either by big cabinets placed in the living room or by portable transistor radios. Essentially, people didn’t have to buy new receivers unless they broke, although FM radio was introduced in the 1960s and induced many people to buy new equipment. Notice that the crystal set is placed under the arrow, because it really belongs to the pioneering age, and not to the mature radio medium that this chapter will describe.

During this long period radio was firmly established as a popular medium in Western countries. These forty years of development in media also spawned television, telex, sound film and radio. After the war there was a great increase in the production and purchase of consumer electronics, because part of the huge production capacity that had been developed to fight the war was redirected to the needs of civil society (Chanan 1995: 92).

If we go all the way back to the pioneer days in the 1920s, when the BBC and other early broadcasters were established, not even radio was a proper media environment. There was less of it in time per day, and therefore it was a scarcer communicative resource. More importantly, listening techniques were less rooted in the cultural and domestic environment than they are today. The whole form of communication was less ordinary and more serious than it is now. Although there were great advances in every direction, this impression of radio as a spectacular new medium lived on until television took over from it in the 1960s.

During this period it dawned on the industries of culture that wireless was becoming a new profession alongside the old ones, such as newspaper journalism and movie making, and this meant that it was more attractive to culture workers. As employees in the new medium gained experience and self-confidence they continued to perfect the new craft with a sensitivity and common direction lacking before. From this early approach there emerged a skill with an expressive identity of its own: radio journalism.

Sounds from the moon, 1969

Before going into the national sounds of radio, I will present a case of global broadcasting. In the 1960s it became normal for broadcasts to have several hundred million people listening or watching, a good example being the transmission from John F. Kennedy’s funeral in 1963 (Dayan and Katz 1992: 126). The USA showed its superiority in both technology and mass mediation with the NASA moon landing in 1969. It was called ‘the greatest show in the history of television’, and was watched by 125 million Americans and 723 million other people around the world (Briggs and Burke 2002: 253). The historic relay from the Mare Tranquillitatis to Houston, Texas, was arranged by American technologists and astronauts in July 1969 and demonstrates well the great reach of broadcast media. This giant leap of electronic mediation had been facilitated by almost a hundred years of innovation, and the step onto the moon was the final proof of the unnatural capacities of these innovations. As suggested, most people watched and heard the moon landing on television. Radio had been marginalized by television as the main national arena for big events such as this. If Marshall McLuhan’s notion of the global village was ever pertinent, it was during the hours of this transmission, when millions of people were tuned in to the same sounds and images, their movement concerted into shared attention to their receiving apparatus, and a lifetime of memories was set in motion for many different people based on the same sounds and images. The lunar transmission demonstrates perfectly the documentary realism that live broadcasting facilitates – the sense of getting perceptual access to a world somewhere else through technology.

Track 23: NASA: Neil Armstrong on the Moon, 1969 (1:19).

[Heavy electronic noise throughout. Bursts of distortion.]

– Buzz, this is Houston. F2 one-one-sixtieth seconds for shadow photography on the sequence camera.

– OK.

– I’m at the foot of the ladder. The LEM footbeds are only depressed in the surface about one or two inches. Although the surface appears to be very, very fine grained as you get close to it. It’s almost like a powder,

[long pause]

– I’m gonna step off the LEM now.

[long pause]

– It’s one small step for man, one giant leap for mankind.

There is an interesting acoustic architecture to these sounds. Initially, the words were formulated inside a helmet with rather poor acoustics. Since there are no sounds in the vacuum of outer space there is no acoustic space to reproduce and represent. Indeed, the sounds from the moon came exclusively from within Neil Armstrong’s helmet, and were facilitated by microphones and amplifiers brought to the moon by the astronauts.

The audio signals were beamed back to earth across the approximately 240,000 miles of empty space, picked up by satellites orbiting over the Atlantic and the Pacific Oceans, and relayed to the primary reception station at Mission Control in Houston (Goodwin 1999). From Texas they were further relayed to national stations all over the globe, and finally the connections into living-room receivers were made from aerials on mountain tops or tall buildings. This complex process made it possible for Armstrong’s words to be heard in millions of homes. In listening locales the ambience was further determined by the quality of the listeners’ equipment and the individual room acoustics. These details suffice to show that the moon transfer was obviously a non-local event (Aarseth 1993: 24) entirely lacking in geographical limitations.

What about the temporal dimension? This event was live at the point of transmission, but since it was sent from the moon its temporality was if possible even more confused than its ambience. Admittedly, there was an unbroken contact between the events on the moon and the perception of them on planet earth, so in a strictly physical sense one could say that the Armstrong quote took place on the moon and all over the world at the same time. But since for all practical purposes there is no ‘moon time’, the event had to be planned for a suitable ‘earth time’, and since the Americans controlled the presentation they arranged it for US prime time on the evening of 20 July. In Norway it was an early morning event on 21 July, while in some other parts of the world it was a lunchtime or afternoon event (Bastiansen 1994). The crackling sounds from the live broadcast naturally lent the quality of immediate disappearance, relaying a contingent event that could only be experienced right then, by the people living and listening in on that quite memorable Sunday/Monday.

I will also comment on the soundbite rhetoric of Armstrong’s statement (Scheuer 2001). The sounds and images from the lunar event were of course recorded on tape, or else we would not be able to hear them now. The transmission was recorded for documentary purposes, centrally by NASA, nationally by hundreds of radio and television stations, and privately by thousands of listeners. News services all over the world repeated the soundbite ‘A small step for man, a giant leap for mankind’. But Armstrong pronounced it wrong. He should have said: ‘A small step for a man, a giant leap for mankind’, which would have brought out the difference between himself as a mortal individual and the larger society of humans that he represents. Armstrong later claimed that this phrase was something he came up with of his own accord, but the phrase is so appropriate to the occasion that it would probably have been chosen regardless of who actually stepped down first. NASA is the real producer of these words, while Armstrong is a replaceable actor-astronaut.

This NASA case illustrates a critical point made by Murray Schafer ([1977] 1994: 77), namely that a man with a loudspeaker is more imperialistic than one without. Schafer is concerned with the dominance that can be gained by sheer media volume, and there is no doubt that the NASA transmission was a form of imperialism also at the level of sound. The rocket noises and flames and smoke of the huge technological set-up infused the event with extra credibility and authenticity. The machine hum, microphone noises and the other scrambling, crackling, hissing, fluttering disturbances added on the long transport through space made it abundantly clear to audiences across the world that there was an unprecedented technological feat taking place. This means that the poor technical fidelity of the NASA sound feed gave listeners good reason to acknowledge this as a transfer from the moon, and here is a subtle form of noise imperialism. Notice that there are many conspiracy theories about the moon landing in 1969, and there is much evidence and counter-evidence regarding it (Phillips 2001).

The national radio public

The next case takes us back to earth, and helps to clarify radio’s status in the 1960s. At that time listeners could take several features absolutely for granted. There would be a constant offer of programmes from multiple stations, and some stations even ran for twenty-four hours a day. And they would serve up a sound quality of little or no distortion, so that the soundscapes were very comfortable in the home.

Radio journalism consisted, for example, of national sporting events, Saturday night programmes, and news events on a regional and national level. This was a public sphere that people could not choose; they lived in the midst of it whether they liked it or not. Radio’s national public is at the heart of this chapter. It was already on the wane in the 1960s because the pressure from television meant that both listeners and producers were looking for something new, and radio stations started airing pop music and other forms of lighter, more commercial content that would not involve the same collective spirit.

Remember that the BBC had been defining British home communications since the 1920s (Briggs [1961] 2000, 1965, 1970). Traditionally, radio was at the centre of the home, and the programmes were solemn and serious. Another way of putting it is that public service broadcasting was dominated by a coercive or authoritative address. In contrast, commercial broadcasting in the USA and on the European mainland was dominated by a more inviting or agreeable presentation. In 1967 the BBC changed its overall stance and adopted a more informal delivery than before. Radio was under pressure from television, and in a move to counteract this the BBC reorganized its output into four channels. Radio 1 was the real novelty, because it broadcast nothing but pop music; it was to become the teenage pop music station, and stop the audience leakage to Radio Luxembourg and pirate stations such as Radio Caroline (Crisell 1997: 140; Briggs and Burke 2002: 227; Hendy 2007).

The next case study is a jingle or promo from the very first day of BBC’s Radio 1, with Robin Scott and Tony Blackburn in the studio (Brand and Scannell 1991). It exhibits a cheerful mood, and several musical styles are mixed together with sound effects on top. It demonstrates well the new sound of an old medium.

Track 24: BBC Radio One: Promo, 1967 (0:27).

[American style choir] The voice of Radio 1

just for fun

music [altered]

too much

– And good morning everyone, welcome to the exciting new sound of Radio 1.

[Up-tempo beat, trumpets, dog barks]

Notice that the jingle is produced in the style that I discussed in chapter 7 as ‘flaunting the montage’. This is supposed to sound advanced, playful and slightly reckless. The live show was fast-paced and entertaining, and used all kinds of verbal effects that were really invented by American radio DJs. It had been taken up by Radio Luxembourg and pirate music stations of various types, and now it was also taken up by the BBC.

The sound of this jingle signifies a dramatic shift in the style of radio. During the 1950s and 1960s there were two crucial changes in the media infrastructure of domestic life that meant radio programmes came to be regarded as background noise. As I have already pointed out, television pushed listening habits out of the evening prime time and into the morning and afternoon. Second, the cheap, lightweight transistor radios that had come on the market in the 1950s meant that radio programmes could be listened to in many more everyday situations than before, so that it would not be a big deal to have radio sets in the kitchen, the living room, the bathroom, the bedroom, the car and the office. The fact that, in 1979, 95 per cent of all cars on the road in the USA had radios (Fornatale and Mills 1980: 20) suggests the level of penetration.

Formatted programmes meant that radio was even more predictable than before. Scheduling consists of making the flow of programming predictable on an hourly basis (Ellis 2000), and stations followed their fixed schedules and seasonal events slavishly. Commercial stations in the USA perfected this strategy long before the monopoly stations in Europe. During prime-time hours, schedules tended to be split into fifteen- or twenty-minute segments, with four or five musical and spoken numbers in each segment (Barnouw 1966: 127), and commercial stations would insert commercial messages between each song. Typically, there was an alternation of vocal and instrumental numbers, which brought some variety to the broadcast output. In this way both variation and predictability were accomplished.

Radio was in a sense becoming less important and more available at the same time. This gave station strategists good reason to orient programming towards a less attentive form of listening. In the USA this strategy is shown clearly in the ‘formula radio’ that was first established in the late 1940s, and mainly played Top 40 pop music (Fornatale and Mills 1980: 13). This is the first inkling of what is generally called ‘format radio’, or ‘wallpaper radio’, and that generally refers to the industrial production of social surfaces in sound. This was a careful construction of inconspicuous programming, and it was all planned so that it would not be reflected on; it engaged the listener by semiconscious invigoration and moods rather than by explicit attention to content.

Kraft Music Hall, 1943

Entertainment was a staple of radio in the early period, and it required quite specific techniques. By the 1930s every programme was expertly staged for sound alone, and the listeners were becoming used to this specialized expressive form. There was no need for imaginative supplements to something that was lacking in the broadcast. Rudolf Arnheim ([1936] 1986: 135) states categorically that nothing is lacking in good radio: ‘For the essence of broadcasting consists just in the fact that it alone offers unity by aural means.’ If a programme demanded supplementation by visual imagination it did not properly utilize the medium’s own resources.

The bigger setting of live orchestras, entertainment shows and theatrical performance can be called the resounding studio. Live music was needed at all hours, and small combos, duets, trios and quartets came through the studios by the hour. There were station orchestras of various sizes depending on the size of the studio, often up to twenty musicians (Briggs [1961] 2000: 253). The biggest studios could have an audience present during the show, something that would lend it a live eventfulness lacking in the smaller studios. Foley artistry was an important part of the resounding radio studio. Although the concept comes from the movie industry, it generally refers to the creation of sound effects live in the studio (Mott 1990). Foley artistry was part of many genres, for example episodes, skits, situation comedy, radio drama and dramatized news reports. It had both humoristic and serious potential. In terms of acoustic architecture, the resounding studio shows invariably enacted what Edward Hall (1969) calls the ‘social distance’, and not the ‘intimate distance’.

The next case study takes us back to World War II, just before Christmas 1943. The performer is the great radio star Bing Crosby, with his big-budget production and high-quality sound. He was a successful performer who made films in Hollywood and had shows on national radio, and now he had enrolled as an ideologist in the war effort, with the task of entertaining people. The grandeur of the resounding studio is well demonstrated with this excerpt from NBC’s Kraft Music Hall in 1943. This was a very popular Saturday night show with several million regular listeners, and it was aired from 1933 to 1949 (Wikipedia 2007, ‘Kraft Music Hall’). During December 1943 the war had turned in the favour of the Allies, and there was more optimism than before. Bing Crosby could sing ‘Happy Holiday’ with enormous resonance in the home.

Track 25: NBC: The Kraft Music Hall with Bing Crosby, 1943 (1:46).

The Kraft Music Hall with Bing Crosby, Trudy Erwin, John Scott Trotter and his orchestra, the music maids and Lee, Yoki, the charioteers, and Bing’s guest for this evening, Paramount star of the Technicolor musical Riding High, Ms Cass Daley. And here’s Bing Crosby:

Happy holiday, happy holiday,

While the merry bells keep ringing

May your ev’ry wish come true.

Happy holiday, happy holiday.

May the calendar keep bringing

Happy holidays to you.

If you’re burdened down with trouble

If your nerves are wearing thin

Pack your load down the road

And come to Holiday Inn.

If the traffic noise affects you

Like a squeaky violin

Kick your cares down the stairs

And come to Holiday Inn.

If you can’t find someone who

Will set your heart a-whirl

Take a little business to

The home of boy meets girl.

If you’re laid-up with a breakdown

Throw away your vitamin

Don’t get worse, grab your nurse

And come to Holiday Inn.

[Repeat chorus]

We hear a big orchestra which is miked-up very carefully. There are vocals, whistling, a choir, trumpets, guitars, violins, a piano, and not least the wonderful applause of the audience. The melody is fast-paced with snappy lyrics, and the sound of the orchestra is lush and inviting.

The grand, reverberant sound was the signature of entertainment. The studio audience was important both for inspiring the artists to perform naturally and for creating a lively atmosphere in the home. Cantril and Allport point out that the hosts needed the audience in order to settle into the public mood of radio, as was for example the case with comedians: ‘Since radio comedians almost invariably have stage training, they know how to take cues from the audience whose responses they can both see and hear.’ But radio permits of no such feedback, and therefore the social basis of laughter is destroyed and humour itself is put at risk, they argue. This is why the studio audience is so important. It ‘restores to the comedian some of the advantages lost when he forsook the stage for the studio. Nowadays few radio comedians dare work without a studio audience’ (Cantril and Allport [1935] 1986: 222).

Consequently, audience responses were considered an essential part of the studio atmosphere, and microphones were used to build up the laughter and applause. Barnouw (1968: 99) describes how there was typically a warm-up session by one of the comedians so that the audience could be drilled to give the appropriate response. ‘Come folks, I can’t hear you! You can do better than that!’ He held up the sign: “APPLAUSE!” […] Echoing with the roar of laughing crowds, these theatres gave the impression of a continuing vaudeville tradition.

Bing Crosby comes across as a cheerful singer, fully in command of his art and his orchestra. But in a sense it was the live studio audience, represented by the applause after the performance, who were the main protagonists in this type of show. Cantril and Allport’s listening survey from 1935 suggests that the laughter and applause made the programme more enjoyable for listeners. They felt less foolish when joining in a gaiety already established in the studio, and were ‘drawn still further into the atmosphere of merriment’ (Cantril and Allport [1935] 1986: 223). It is quite clear that the studio audience was important for inspiring the domestic listeners to go along with the show and have fun. Overall, it seems that the reverberant acoustics of the studio influenced the listeners’ reactions in a ‘deep-lying and for the time being quite unconscious’ way (ibid.).

Radio personalities

The next case study is also an entertainment programme, but it is quite unlike The Kraft Music Hall. The show was called ‘The Brains Trust’, and it consisted of a lively dialogue between studio speakers based on questions sent in to the BBC by the listeners. The Britons had been bombed by the Germans for some time, and programmes such as ‘The Brains Trust’ were a wonderful pastime during this ordeal. Over the years many speakers appeared, but the core team was the philosopher C. E. M. Joad, the biologist Julian Huxley and the retired naval officer A. B. Campbell. The host was Donald McCullough. We hear them discussing the question ‘What’s the difference between fresh air and a draught?’

Track 26: BBC: The Brains Trust, 1943 (1:06).

– Next question, I’m afraid the last one, from Miss Moore of Southgate. What is the difference between fresh air and a draught? [mild laughter] Are we going to get at this from a philosophical, a medical, a scientific or a physical point of view? Medical? Doctor, could you give us an idea do you think? Fresh air and a draught?

– I think that what is meant … what is behind this question er is … [laughter] … a prejudice. In other words the person really feels that the draught is an evil thing, but a draught really is only a small installment of fresh air.

– Your idea?

– Surely a draught is fresh air coming through a little hole and impinging upon a little bit of yourself, that’s to say it’s not affecting you equally [mild laughter].

– Gould?

– Surely the distinction is this: It is fresh air when you put the … window down yourself and it is a draught when the person [the words drown in laughter].

– Yes, I think we’ll close on that heavy blend of sociology, philosophy and psychology.

There are four men around the table, and they all carry themselves very consciously in relation to the microphone. There is also a small studio audience that laughs softly at times. The speakers engage in a quite sophisticated verbal artistry, and, although it is produced with the same resounding acoustics as Bing Crosby’s entertainment show, there is little resemblance in the overall mood. The task for each speaker is to sound interesting and smart and funny, and in short to be a radio personality.

Although the conversation must have been rehearsed before going live on the air, the panelists succeeded in creating a good-natured and seemingly spontaneous conversation. They challenge each other with their wit, and create subtle verbal points in the typically English style of understatement. There is lots of sloppy articulation and instability in the pitch and volume of the speakers’ voices, and the conversation is constantly interrupted. This form of talk bears little resemblance to contemporary radio genres such as news reading or lectures, but it certainly resembles everyday speech, and is eminently suited to inspire a sense of sociability among listeners.

The programme’s semi-professional speakers simulate the mood of a dinner party and its enthusiastic conversation. Programmes in this genre would typically have a well-known host and guests of public renown would appear regularly, for example artists, writers, academics, lawyers and doctors. The point is that they became more and more well trained as radio speakers, and could handle verbal and social challenges in public. Such clever speakers could, for example, sound angry or frustrated in just the right way, and increase the entertainment value of the show.

As suggested, ‘The Brains Trust’ provides an example of the emerging radio personality. In 1935 the psychologists Cantril and Allport pointed to the listeners’ tendency to relate to radio speakers as fully comprehensible personalities.

Voices have a way of provoking curiosity, of arousing a train of imagination that will conjure up a substantial and congruent personality to support and harmonize with the disembodied voice. Often the listener goes no further than deciding half-consciously that he either likes or dislikes the voice, but sometimes he gives more definite judgments, a ‘character reading’ of the speaker, as it were, based upon voice alone.

(Cantril and Allport [1935] 1986: 109)

Indeed, listeners identified strongly with the persons behind the voices. Rudolf Arnheim ([1936] 1986: 145) argues that voices familiar from radio intercourse will simply be transformed into familiar people to the listener, not remain familiar voices of unfamiliar people. In this regard the radio personality was quite unlike the star of Hollywood.

The basic technique of the radio personality was to make himself feel at ease in front of the microphone, and convey this ease to the listeners. But this is a difficult thing to do, Arnheim argues:

Such an atmosphere is most difficult to achieve in broadcasting, and certainly never by means of big things, always by little ones. ‘Stimmung’ (atmosphere) is not got so much by jokes and showing off, not by strenuous efforts to gratify, but far rather by the genial affability of the host who serves his guest in a friendly way without making much fuss.

(Arnheim [1936] 1986: 75)

Arnheim goes on to describe a popular speaker from Berlin radio who spoke about legal matters: ‘He spoke, obviously with a cigar-end in his mouth, without manuscript or notes.’ ‘He stuttered, groped for words which immediately occurred to him, generally inspired ones.’ ‘Law and public-speaking were second nature to him, and it was not in his line to treat them with ceremony. The world became a cozy parlour where he sat and spoke at the microphone.’ Informal ways of speaking were attractive for listeners, and this strategy is at the heart of radio’s sociability to this day.

In 1938 the Radio Times concluded that ‘When all is said and done, broadcasting, with all its elaborate mechanisms, is based on and aimed at, the home’ (quoted in Scannell and Cardiff 1991: 374). And during the 1930s broadcasting indeed gained a remarkable presence in the home. Raymond Williams ([1975] 1990: 26ff.) has made a classic statement about the influence of radio in the home. He argues that the transformation from wireless telegraphy to broadcasting led to what he calls a ‘mobile privatization’. On the one hand people gained an easy, almost non-geographic access to the marketplace of ideas, where the difference between centre and periphery was quite irrelevant. On the other hand the family home became an important centre of cultural attention, and public matters could more readily be cultivated in a private setting. Cheap receivers were a significant index of this modern condition with its novel social identifications.

A novel cultural technique can be observed among domestic listeners. They were acquiring the conventions of a documentary realism especially created for the family home. It was supposed to be a collective experience where the listeners could not expect their most individualistic interests or desires to be fulfilled. Cantril and Allport ([1935] 1986: 22-3) pointed out that: ‘If I am to enjoy my radio, I must adjust my personal taste to the program that most nearly approximates it.’ The listeners had to adapt their personal interests to one of the common social moulds that radio offered. ‘If I insist on remaining an individualist, I shall dislike nearly all radio programs’, Cantril and Allport argued.

Nervous news reading, 1941

The serious journalist’s voice from a news studio could carry great weight with the public, especially in times of political tension and war. Most often the journalist read from the script, and the stations favoured medium-pitched male speakers with good diction and high stress tolerance. There were new techniques to be learnt for the news readers.

Clearly, the voice had to be able to convey a sense of authority. ‘I have a job if my voice is all right’, the journalist William L. Shirer wrote in 1937. ‘Who ever heard of an adult with no pretenses to being a singer or any other kind of artist being dependent for a good, interesting job on his voice?’ (quoted in Barnouw 1968: 77). Arnheim ([1936] 1986: 92) complains about people who are too loud for broadcasting, and who make themselves ‘spatially noticeable’ by moving about in the studio or turning their head away from the microphone while speaking. This points to the professional importance of voice control, combining an ability to become transparent with a sonorous, authoritative and physiologically attractive voice. No real affect display, no happiness, anger, sadness or interest were allowed. Erik Barnouw (1968: 150) says that even while uttering words that involved the death of thousands the newsreader should only ‘display a tenth of the emotion that a broadcaster does when describing a prizefight’. In the late 1930s the BBC selected three journalists from the newsroom as presenters to build up experience in the specialized task of reading the news.

‘Without going into personalities, it had become obvious that some announcers were temperamentally very much better suited to tackling news at a moment of crisis than others’

(Scannell and Cardiff 1991: 131).

It was soon acknowledged that the speakers should not sound too detached. A news event was easier to grasp if there was a sense that the speaker reacted to it in a natural way, or reacted like the listener imagined they might do. These emotions would, however, be discernible only through very modest inflections of the voice, and not through dramatic effect. Very little emotion was needed before the listener noticed it.

The next case study is from the tense period during which the USA entered World War II (see Johnson 1999: 778ff. for the full story). On 7 December 1941 the entire US population listened to the dramatic news on network radio. Hour by hour the drama unfolded, as reports about the US response to the Japanese attacks on Pearl Harbor were read out. At the Washington desk of CBS Albert Warner analysed the White House reactions to the bombings (Douglas 1999: 188). As the news keeps pouring in, presumably on telex, the news team continuously updates the script. Here is a portion of the breaking news from that fateful day.

Track 27: CBS: Breaking News, 1941 (1:34).

– Although officials in Washington are silent on what would be the definite consequences of a Japanese attack on Hawaii or an attack on Thailand, there are indications of … what … is being considered … and the steps which may be taken this very afternoon. The first would be a severance of diplomatic relations with Tokyo. An immediate naval blockade, in which the American navy would take a leading part along with British units, is the other probability. Both these steps could be taken by the president on his own executive authority, but an effective naval blockade of course could not continue long without hostilities. As a matter of fact, according to the president’s announcement those hostilities are already under way with the Japanese attack on Pearl Harbor. And just now comes the word … from the president’s office that a second air attack has been reported on army and navy bases in Manila. … Thus we have official announcements from the White House that Japanese airplanes have attacked … Pearl Harbor in Hawaii and have now attacked army and navy bases in Manila. [pause] We return you now to New York and will give you later information as it comes along from the White House. Return you now to New York.

Warner is audibly stressed. He returns us to New York for much the same reason that the 1010 WINS anchor went live to CNN during the terror attacks on the Twin Towers (chapter 4). At that point it is impossible for him to talk coherently. Warner was already a little shaken before the reports about another air attack reached the studio. He continues reading as before, but we can hear how his voice trembles, and how he struggles to continue reading his script in a normal way as he attends to the new information. He discloses the new developments in bursts of words, with short strained pauses in between.

My point is that Warner’s staccato speech did not ruin the informational function of the bulletin; on the contrary, it reinforced it because in effect it carried an emotion that the listeners were likely to feel themselves. Albert Warner demonstrates that loss of control may inspire a stronger sense of credibility than ordinarily, as the dialogues from 1010 WINS also demonstrate. Had Warner let his voice tremble on purpose, and had it been done by all the news readers as a regular trick, there would have been less intensity to the performance. The excerpt shows that, in radio, tiny deviations from the norms of speech may induce heightened feelings of trustworthiness.

The identity of newsreaders and public speakers in general was felt to be important for the credibility of the messages. The invasion of Norway in 1940 forced Norwegian broadcasting to take place on short wave from the BBC in London. After a period of using British-Norwegian speakers, a Norwegian politician argued that ‘a familiar Norwegian voice would improve the broadcasts both qualitatively and psychologically’ (quoted in Dahl 1999b: 124). At the outbreak of the war the BBC instructed bulletin readers to identify themselves by name before reading the news (Schlesinger 1992: 30). Rather than being anonymous voices, they were to become individual persons.

The international conflicts made national identification more important than before. The major division was between the Allies and the Axis powers, that is, the ‘Big Three’ – the USA, Great Britain and the Soviet Union – versus Germany, Italy and Japan. During the 1930s and 1940s the news bulletins that filled the air on short and medium wave would be highly contradictory. The national broadcasting stations were part of an ideological struggle, and were used instrumentally for various political purposes. For example, the Reichsrundfunk in Germany and the BBC in Great Britain had strikingly different strategies and often contradictory claims about factual matters. The listener’s sense of truth and relevance would be influenced by their national sympathies and antipathies. Clearly the American subject would be more likely to believe the American stations than the Japanese broadcasts in English. Consequently, the news was likely to be felt as trustworthy if there was consensus between the station and the individual listener, and likely to be felt as in doubt or simply false if there was no such consensus.